Source: Sina Technology Synthesis

Using a single camera to take background-blurred photos is nothing new; the iPhone XR and the earlier Google Pixel 2 both made similar attempts.

Apple's new iPhone SE does the same, but its camera hardware is so old that most of the credit goes to the algorithm.

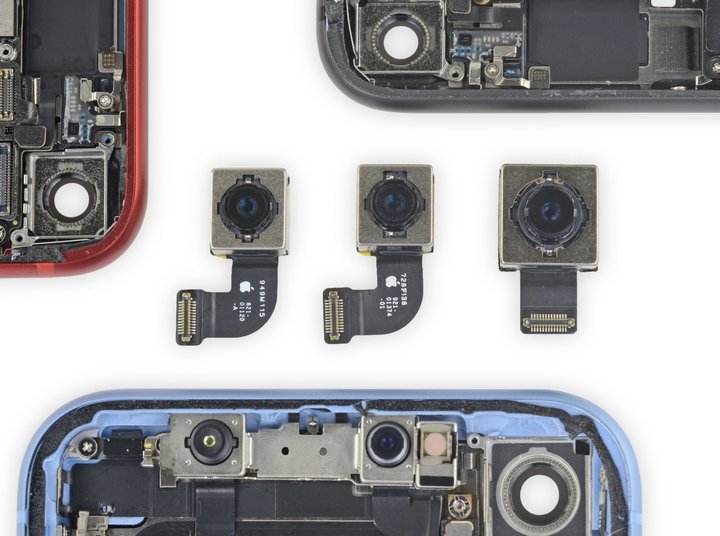

iFixit's teardown report shows that some parts in the new iPhone SE are identical to those in the iPhone 8, to the point that they can be used interchangeably, including the 12-megapixel wide-angle camera.

Putting 'new wine in old bottles' is nothing unusual for the iPhone SE. Four years ago, the first-generation iPhone SE also reused the exterior and most of the hardware of the iPhone 5s, which let Apple offer a lower price.

In theory, with the same camera hardware, the two phones' camera capabilities should not differ much. For example, the iPhone 8 does not support shallow depth-of-field photos with a sharp subject and a blurred background, which is what we usually call "portrait mode".

But Apple's support page shows that the portrait mode the iPhone 8 lacks is supported by the new iPhone SE, even though the rear lens specifications of the two are exactly the same.

Normally, a phone needs dual cameras to take blurred-background photos. Just like human eyes, the phone captures two images from slightly different angles through two lenses at different positions, then uses the parallax between them to estimate depth, blurring the background while keeping the subject sharp.
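
The link between parallax and distance can be illustrated with the standard pinhole stereo relation, depth = focal length × baseline / disparity. The following is a minimal sketch, not Apple's implementation; the function name and the example numbers (a focal length of 2800 pixels and lenses 12 mm apart) are assumptions chosen only for illustration.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Estimate the distance (in metres) to a point seen by two parallel cameras."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Hypothetical numbers: 2800 px focal length, lenses 12 mm apart.
near = depth_from_disparity(2800, 0.012, 40.0)  # large shift -> close subject
far = depth_from_disparity(2800, 0.012, 4.0)    # small shift -> distant background
print(f"subject: {near:.2f} m, background: {far:.2f} m")
```

The larger the shift of a point between the two images, the closer it is. A smaller baseline shrinks every disparity, which is why depth from closely spaced viewpoints is harder to measure.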

The Plus models on the list, as well as the X, XS and 11 of recent years, basically rely on multi-camera systems for portrait blur.

So how does the iPhone's single front camera manage it? The key is the infrared dot projector in the Face ID system, which can also capture sufficiently accurate depth data, effectively acting as an 'auxiliary lens'.

Seen this way, the iPhone SE's ability to take portrait mode photos is quite special: first, it has no multi-camera system; second, it has no Face ID. There is basically no hardware support to draw on.

Clearly, Apple has made some changes at the software level that we cannot see.

Recently, Ben Sandofsky, a developer of the third-party camera app Halide, explained the technical principles behind this: why the new iPhone SE, with the same single-lens specifications as the iPhone 8, can offer a portrait mode that the latter cannot.

Halide said that the new iPhone SE is likely 'the first iPhone that can generate a portrait blur effect using only a single 2D image'.

You might ask: doesn't the iPhone XR also do single-camera blur? Isn't the SE just copying it?

However, teardowns have shown that the cameras of the iPhone SE and iPhone XR are not the same, which leads to differences in how the two implement the feature.

▲ The Samsung Galaxy S7 series was the first to use DPAF technology in a smartphone camera

Most importantly, the iPhone XR's camera can use dual-pixel autofocus (DPAF) technology, which lets it obtain some depth data from hardware.

Put simply, DPAF splits each pixel on the camera sensor into two smaller side-by-side pixels, capturing two images from slightly different angles, just like our left and right eyes.

Although the resulting angle difference is not as pronounced as with dual cameras, it still helps the algorithm generate depth data.
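
As a toy illustration of how a shift between two such views could be measured, the sketch below slides one 1D row of pixel values against the other and picks the offset with the lowest average absolute difference. It is only a sketch under simplifying assumptions: the function name and sample data are hypothetical, and real pipelines match 2D patches with sub-pixel precision.

```python
def best_shift(left, right, max_shift=3):
    """Return the integer shift of `right` that best matches `left`.

    The cost is the mean absolute difference over the overlapping pixels.
    """
    best, best_cost = 0, float("inf")
    n = len(left)
    for s in range(-max_shift, max_shift + 1):
        cost, count = 0.0, 0
        for i in range(n):
            j = i + s
            if 0 <= j < n:
                cost += abs(left[i] - right[j])
                count += 1
        cost /= count
        if cost < best_cost:
            best, best_cost = s, cost
    return best


left_view = [0, 0, 10, 50, 10, 0, 0, 0]
right_view = [0, 0, 0, 10, 50, 10, 0, 0]  # same edge, shifted by one pixel
print(best_shift(left_view, right_view))
```

Here the bright edge sits one pixel further right in the second view, so the function reports a shift of 1. With half-pixels so close together the shifts are tiny, which matches the point that the angle difference is far less pronounced than with two separate cameras.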

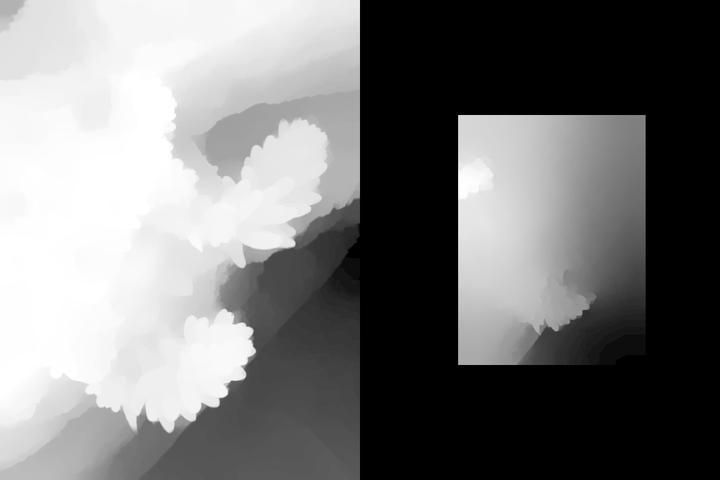

▲ The two views the Google Pixel 2 and 3 obtain using DPAF are hard to tell apart with the naked eye, but they still help the image segmentation algorithm make its judgments

Google previously used this technology on the Pixel 2 and 3 to achieve single-camera blur. On the Pixel 4, which switched to a multi-camera setup, parallax detection is significantly more accurate than with a single camera.

▲ Let's take a look at the data obtained by the Pixel 4 using two cameras.

As for the new iPhone SE, its sensor is so old that, according to Halide, it cannot rely on the sensor to obtain disparity maps. It can basically only rely on machine learning algorithms powered by the A13 Bionic chip to estimate and generate a depth map.

In one sentence: the iPhone SE's portrait blur is achieved entirely through software and algorithms.

▲ This photo was shot directly with the iPhone XR and the new iPhone SE

Halide used both the iPhone XR and the new iPhone SE to photograph a picture of a puppy (not a live subject, just a photo of a photo), and then compared the depth data of the two shots.

They found that the iPhone XR only did a simple image segmentation to pull out the subject, and did not correctly recognize the puppy's ears.

▲ Depth maps: iPhone XR on the left, new iPhone SE on the right

On the new iPhone SE, however, the new algorithm powered by the A13 chip produced a depth map completely different from the XR's. It not only correctly recognizes the puppy's ears and overall outline, but also separates the background into layers at different distances.
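
How a depth map might drive this kind of layered blur can be sketched in a few lines: pixels near the focal plane stay sharp, while pixels further from it are averaged over a wider window. This is an illustrative sketch only, not Apple's or Halide's renderer; the function names, the `strength` and `max_radius` parameters, and the 1D sample data are all assumptions.

```python
def blur_radius(depth, focus_depth, strength=2.0, max_radius=4):
    """Map distance from the focal plane to a box-blur radius in pixels."""
    return min(max_radius, int(round(strength * abs(depth - focus_depth))))


def depth_blur(row, depths, focus_depth):
    """Blur each pixel of a 1D row with a box filter sized by its depth."""
    out = []
    for i, d in enumerate(depths):
        r = blur_radius(d, focus_depth)
        window = row[max(0, i - r): i + r + 1]
        out.append(sum(window) / len(window))
    return out


row = [100, 0, 100, 0, 100, 0]
depths = [1.0, 1.0, 1.0, 3.0, 3.0, 3.0]  # first half subject, second half background
print(depth_blur(row, depths, focus_depth=1.0))
# [100.0, 0.0, 100.0, 50.0, 50.0, 40.0]
```

The first three pixels sit on the focal plane and keep their contrast, while the background pixels are smoothed. If the depth map assigns the wrong depth to part of the subject, that part is blurred along with the background, which is why the quality of the depth map matters so much.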

This kind of depth map is not 100% accurate. Halide said that when the new iPhone SE shoots blurred photos of subjects other than faces, its cutout and blurring are less accurate than when it shoots portraits.

The advantage of multiple cameras becomes especially obvious when the boundary between subject and background is indistinct.

▲ With non-face subjects like this, where subject and background are not clearly separated, the new iPhone SE's blur is prone to mistakes

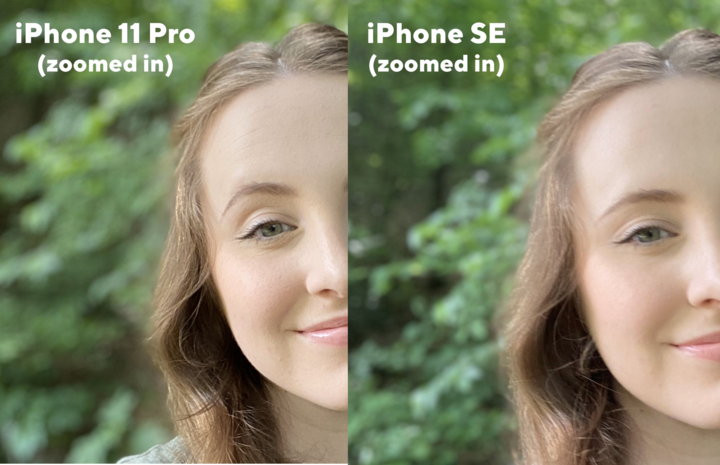

As this picture shows, the iPhone 11 Pro, with its multi-camera system, not only outlines the small plants on the log completely, but also recognizes how far away the background is and processes it in layers.

▲ Depth maps: iPhone 11 Pro on the left, new iPhone SE on the right

On the new iPhone SE, although the depth map is also layered, the subject and background have completely merged together. Naturally, the resulting blur is much worse than on the iPhone 11 Pro.

▲ The actual blurred samples: iPhone 11 Pro on the left, new iPhone SE on the right

That is why, in iOS's built-in camera app, the new iPhone SE only enables "Portrait Mode" when it detects a human face. Otherwise, an error message appears.

The reason again lies in Apple's algorithm. Halide mentioned a technique called Portrait Effects Matte, which is mainly used to find the precise outline of people in portrait mode photos, including fine details such as stray hairs at the edges and glasses frames, so that the subject can be separated from the background.
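
Once such a matte is available, a typical way to use it is per-pixel blending: where the matte is 1 the sharp subject is kept, where it is 0 the blurred background is used, and fractional values give soft edges. The sketch below shows this generic compositing step on 1D sample data; it is not Apple's implementation, and the function name and values are hypothetical.

```python
def composite(sharp, blurred, matte):
    """Blend per pixel: matte=1 keeps the sharp subject, matte=0 uses the blurred background."""
    if not (len(sharp) == len(blurred) == len(matte)):
        raise ValueError("all inputs must have the same length")
    return [m * s + (1.0 - m) * b for s, b, m in zip(sharp, blurred, matte)]


sharp = [200, 180, 90, 60]
blurred = [120, 120, 80, 80]
matte = [1.0, 0.5, 0.0, 0.0]  # fractional values model soft edges such as hair
print(composite(sharp, blurred, matte))
# [200.0, 150.0, 80.0, 80.0]
```

A matte that is wrong for a given pixel puts sharp detail in the background or blur on the subject, so a matte trained mostly on people can be expected to misplace edges on other subjects.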

For now, though, this machine-learning-based segmentation is geared mainly toward photographing people. It can indeed make up for the lack of parallax data on single-camera phones such as the iPhone XR and iPhone SE, but when the subject changes from a person to some other object, the algorithm makes mistakes.

Multi-camera phones like the iPhone 11 Pro, by contrast, can get parallax data directly from the camera hardware, so they can use portrait mode on non-face subjects even in the built-in camera app.

▲ The front camera of the new iPhone SE also supports portrait mode; its face cutout is very precise, and the only imaging difference is in the bokeh effect

Of course, third-party developers can still enable what is not officially supported. The Halide app now lets the iPhone XR and SE take blurred photos of small animals and other objects. It, too, uses Apple's Portrait Effects Matte technology to obtain depth maps, and then adds its own back-end optimization.

▲ Using third-party apps like Halide, you can use the new iPhone SE to take blurred photos of non-face subjects

Overall, the portrait blur on the new iPhone SE is about the limit of what software optimization can achieve on a single-camera phone. Strictly speaking, the credit belongs to the A13 chip: without the latest machine learning algorithms it brings, and judged on camera hardware alone, the SE's shooting experience would clearly be only half as good.

So it still makes sense for smartphones to develop multi-camera systems. We can use an ultra-wide-angle lens to widen the field of view and rely on a telephoto lens for lossless zoom, not to mention the help extra hardware gives augmented reality sensing. None of this can be achieved by an OTA upgrade or by polishing algorithms alone.

Of course, blindly boasting about and competing on the number of cameras is also annoying. If hardware only determines the lower limit of imaging, then a set of excellent algorithms can significantly raise the upper limit, and even bring out the value and potential of old hardware anew.

Who knows: if we wait another four years for the next-generation iPhone SE, will single cameras still have a place in the mobile phone industry?

Post time: May-06-2020